Introduction

We present a novel SLAM dataset containing 4D radar data, LiDAR data, and ground truth, covering a range of challenging scenes. To the best of our knowledge, few public automotive datasets include 4D radar data. We hope our dataset will facilitate the development of 4D radar-based SLAM in the community.

Our 4D radar dataset is now available at LINK.

Figure 1. Example clip from our dataset with 4D radar points (white) and LiDAR points (colored).

Sensor Setup

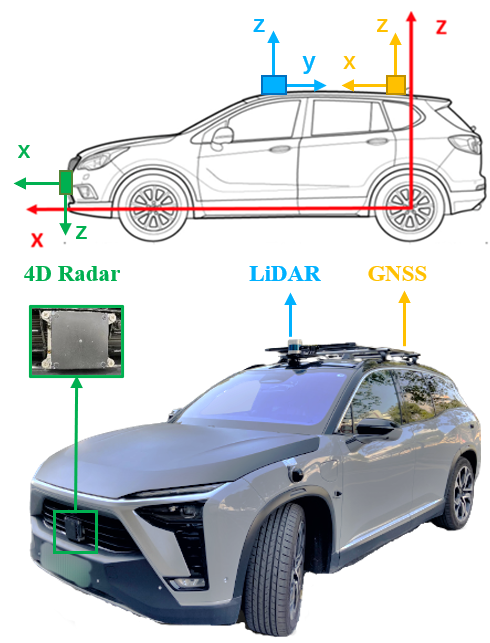

The vehicle we used to collect our 4D radar dataset is shown in Figure 2. It is equipped with a ZF FRGen21 4D radar, a Hesai Pandar128 LiDAR, and a NovAtel GNSS integrated navigation system.

Figure 2. Our data collection platform.

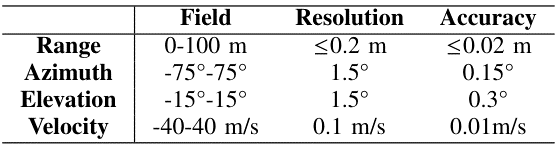

The 4D radar is mounted in the middle of the front bumper. It has 12 transmitting and 16 receiving antennas, which together form 192 (12 × 16) virtual channels, and it operates in the 76 GHz to 77 GHz band. It outputs a point cloud frame of about 400 to 1400 points every 60 ms. The main parameters are listed in Table 1.
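As a quick consistency check on these figures, assuming the usual MIMO convention that each transmit-receive antenna pair forms one virtual channel, the channel count, frame rate, and approximate point throughput follow directly. The frame rate and throughput below are derived from the 60 ms period and the 400 to 1400 points per frame quoted above, not taken from the radar's specification sheet.

```latex
\begin{aligned}
N_{\text{virt}} &= N_{\text{Tx}} \times N_{\text{Rx}} = 12 \times 16 = 192,\\
f_{\text{radar}} &= \frac{1}{60~\text{ms}} \approx 16.7~\text{Hz},\\
\dot{N}_{\text{radar}} &\approx \frac{400~\text{to}~1400~\text{points}}{0.06~\text{s}} \approx 6.7 \times 10^{3}~\text{to}~2.3 \times 10^{4}~\tfrac{\text{points}}{\text{s}}.
\end{aligned}
```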

Table 1. Specifications of the 4D radar

The LiDAR is mounted on the roof of the vehicle. It scans at 10 Hz and produces 230,400 points per frame.
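The per-frame point count can be related to the scan rate and the sensor's channel count. Assuming the Pandar128's 128 laser channels each return the same number of points per revolution (an assumption on our part; dual-return modes or dropped returns would change the split), the figures work out as follows, where the azimuth step is implied by the arithmetic rather than quoted from the LiDAR datasheet:

```latex
\begin{aligned}
\dot{N}_{\text{LiDAR}} &= 230\,400~\tfrac{\text{points}}{\text{frame}} \times 10~\tfrac{\text{frames}}{\text{s}} = 2.304 \times 10^{6}~\tfrac{\text{points}}{\text{s}},\\
n_{\text{ch}} &= \frac{230\,400}{128} = 1800~\text{points per channel per frame},\\
\Delta\phi &= \frac{360^\circ}{1800} = 0.2^\circ~\text{(implied azimuth step)}.
\end{aligned}
```

By comparison, the radar's 400 to 1400 points every 60 ms amount to roughly 6,700 to 23,000 points per second, so the LiDAR delivers about 100 to 350 times as many points per second.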

The GNSS system provides centimeter-level localization and outputs the vehicle's ground-truth pose in the Universal Transverse Mercator (UTM) coordinate system at 50 Hz.
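Because the 50 Hz ground truth and the radar frames (one every 60 ms) are not time-aligned, evaluating a radar-based trajectory usually requires the ground-truth pose at each radar timestamp. The sketch below linearly interpolates planar poses and shifts UTM coordinates into a local frame. The `Pose2D` layout (timestamp, easting, northing, yaw) and the helper names are hypothetical illustrations, not the dataset's actual ground-truth message format, which may carry a full 6-DoF pose.

```python
import math
from bisect import bisect_left
from dataclasses import dataclass
from typing import List


@dataclass
class Pose2D:
    """Hypothetical planar pose; the dataset's real GT message layout may differ."""
    t: float    # timestamp (s)
    x: float    # UTM easting (m)
    y: float    # UTM northing (m)
    yaw: float  # heading (rad)


def wrap_angle(a: float) -> float:
    """Wrap an angle into [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def interpolate_pose(gt: List[Pose2D], t: float) -> Pose2D:
    """Linearly interpolate the ground-truth pose at time t (gt sorted by t)."""
    times = [p.t for p in gt]  # rebuilt per call only to keep the sketch short
    if not gt or not times[0] <= t <= times[-1]:
        raise ValueError(f"t={t} is outside the ground-truth time span")
    i = bisect_left(times, t)
    if times[i] == t:
        return Pose2D(t, gt[i].x, gt[i].y, gt[i].yaw)
    a, b = gt[i - 1], gt[i]
    s = (t - a.t) / (b.t - a.t)
    # Interpolate heading along the shortest arc so a -pi/pi crossing is handled.
    dyaw = wrap_angle(b.yaw - a.yaw)
    return Pose2D(t, a.x + s * (b.x - a.x), a.y + s * (b.y - a.y),
                  wrap_angle(a.yaw + s * dyaw))


def to_local(poses: List[Pose2D], origin: Pose2D) -> List[Pose2D]:
    """Shift UTM coordinates so that `origin` maps to (0, 0).

    UTM eastings and northings are large numbers, so working relative to
    the first pose keeps values small and easier to inspect.
    """
    return [Pose2D(p.t, p.x - origin.x, p.y - origin.y, p.yaw) for p in poses]
```

For a long sequence, one would precompute the timestamp list once instead of rebuilding it on every call; linear interpolation is reasonable here because 50 Hz samples are only 20 ms apart at driving speeds under 30 km/h.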

Data Collection

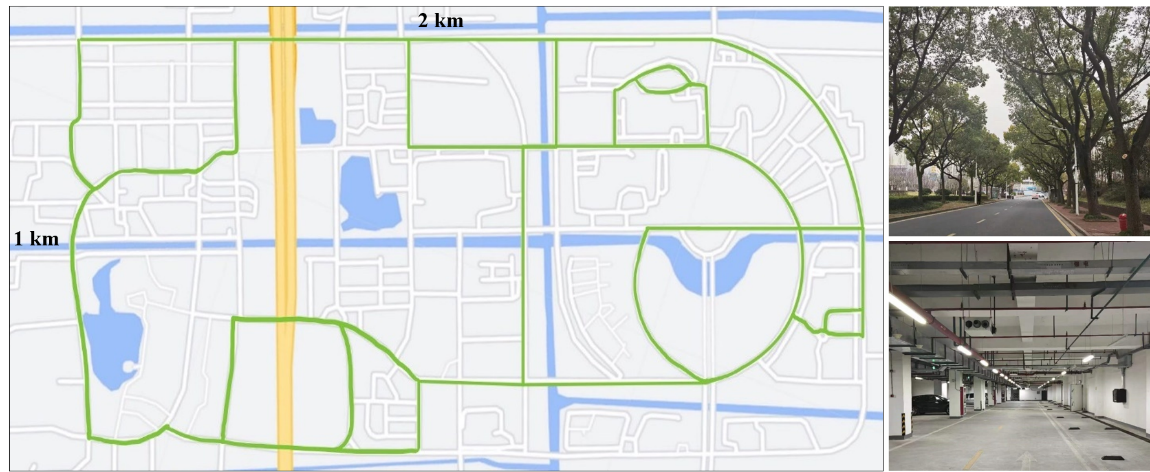

We collected the dataset in different challenging scenes, including an industrial zone and the campus of Shanghai Jiao Tong University. The average driving speed during data collection was under 30 km/h, and almost all data sequences contain loop closures.

The industrial zone is small in scale. Its narrow roads, flanked by walls on both sides, produce more radar reflection noise.

The campus is much larger, as illustrated in Figure 3, covering an area of 2 km × 1 km; the green lines indicate driving trajectories totaling over 20 km. It contains more trees and dynamic objects such as cars and pedestrians, so the radar point clouds are sparser and less stable. We also collected indoor data in an underground parking lot, where reflection noise is even more pronounced, and evaluated algorithm performance there as well.
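Since almost all sequences contain loop closures, one way to locate them is to search the ground-truth trajectory for places the vehicle revisits after some time has passed. The sketch below does a brute-force O(n²) search over (timestamp, easting, northing) tuples; `find_revisits`, its 5 m radius, and its 30 s minimum gap are hypothetical choices for illustration, not parameters from the dataset or the paper.

```python
import math
from typing import List, Tuple


def find_revisits(traj: List[Tuple[float, float, float]],
                  radius: float = 5.0,
                  min_gap: float = 30.0) -> List[Tuple[int, int]]:
    """Return (i, j) index pairs where pose j revisits the place of pose i.

    traj: (t, x, y) tuples sorted by time, e.g. ground-truth UTM positions in meters.
    A pair qualifies when the positions are within `radius` meters and the
    timestamps differ by at least `min_gap` seconds, so that consecutive
    poses on the same pass are not reported as loop closures.
    """
    pairs = []
    for j, (tj, xj, yj) in enumerate(traj):
        for i in range(j):
            ti, xi, yi = traj[i]
            if tj - ti < min_gap:
                break  # traj is time-sorted, so every later i is even closer in time
            if math.hypot(xj - xi, yj - yi) <= radius:
                pairs.append((i, j))
    return pairs
```

A spatial index such as a k-d tree would replace the inner loop for trajectories of tens of thousands of poses; the brute-force version is kept here because it is easy to verify.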

Figure 3. Left: The map of the campus. Right: Some challenging environments including a road with many trees and an underground parking lot.

The LiDAR data is stored in PCAP files to save disk space. The other data, including the 4D radar data and the ground truth, is recorded in ROS bag files. For more details about our dataset and data conversion, please refer to README.md in the dataset folder. (Due to privacy policies, the industrial zone data is currently not available for public release.)
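Raw packets in a PCAP file can be read with the Python standard library alone. The sketch below assumes the classic libpcap file format (not pcapng), Ethernet framing, and IPv4/UDP packets; decoding the Hesai Pandar128 UDP payload into points is vendor-specific and is deliberately left out. `read_pcap` and `udp_payload` are hypothetical helpers, not part of the dataset's tooling; for the supported conversion workflow, refer to README.md in the dataset folder.

```python
import struct
from typing import BinaryIO, Iterator, Optional, Tuple

PCAP_MAGIC_US = 0xA1B2C3D4  # classic pcap, microsecond timestamps
PCAP_MAGIC_NS = 0xA1B23C4D  # classic pcap, nanosecond timestamps


def read_pcap(f: BinaryIO) -> Iterator[Tuple[float, bytes]]:
    """Yield (timestamp_s, frame_bytes) for each record of a classic pcap file."""
    header = f.read(24)  # global header: magic, version, zone, sigfigs, snaplen, linktype
    if len(header) < 24:
        raise ValueError("truncated pcap global header")
    for endian in ("<", ">"):  # the magic number reveals the file's byte order
        magic = struct.unpack(endian + "I", header[:4])[0]
        if magic in (PCAP_MAGIC_US, PCAP_MAGIC_NS):
            break
    else:
        raise ValueError("not a classic pcap file (pcapng is not handled here)")
    frac_scale = 1e-6 if magic == PCAP_MAGIC_US else 1e-9
    record = struct.Struct(endian + "IIII")  # ts_sec, ts_frac, incl_len, orig_len
    while True:
        raw = f.read(record.size)
        if len(raw) < record.size:
            return  # end of file
        ts_sec, ts_frac, incl_len, _orig_len = record.unpack(raw)
        frame = f.read(incl_len)
        if len(frame) < incl_len:
            return  # truncated final record
        yield ts_sec + ts_frac * frac_scale, frame


def udp_payload(frame: bytes) -> Optional[bytes]:
    """Return the UDP payload of an Ethernet II + IPv4 frame, else None.

    VLAN tags, IPv6, and IP fragmentation are not handled in this sketch.
    """
    if len(frame) < 14 + 20 or frame[12:14] != b"\x08\x00":  # EtherType IPv4
        return None
    ip = frame[14:]
    ihl = (ip[0] & 0x0F) * 4  # IPv4 header length in bytes
    if ip[9] != 17 or len(ip) < ihl + 8:  # protocol 17 = UDP
        return None
    udp = ip[ihl:]
    udp_len = struct.unpack("!H", udp[4:6])[0]  # UDP header + payload length
    return udp[8:udp_len]
```

Streaming records through a generator keeps memory use flat, which matters because a 10 Hz LiDAR producing 230,400 points per frame fills PCAP files quickly.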

Citation

If you find the dataset useful in your research, please consider citing it as:

@ARTICLE{10173499,
  author={Li, Xingyi and Zhang, Han and Chen, Weidong},
  journal={IEEE Robotics and Automation Letters},
  title={4D Radar-Based Pose Graph SLAM With Ego-Velocity Pre-Integration Factor},
  year={2023},
  volume={8},
  number={8},
  pages={5124-5131},
  doi={10.1109/LRA.2023.3292574}}

Contact Us

This dataset is available for non-commercial research purposes only. If you have any questions, please contact us at lixingyi830@sjtu.edu.cn.
